SLV Install - Kafka


Why a Kafka Cluster?

Apache Kafka provides durable, high-throughput event streaming that powers real-time data pipelines, distributed services, and analytics. Deploying a Kafka cluster with slv install delivers consistent broker configuration, topic defaults, and observability hooks without maintaining bespoke scripts.

Highlights

- Kafka: https://kafka.apache.org/

- Scales to millions of messages per second across brokers, with durability provided by replicated, disk-persisted partition logs.

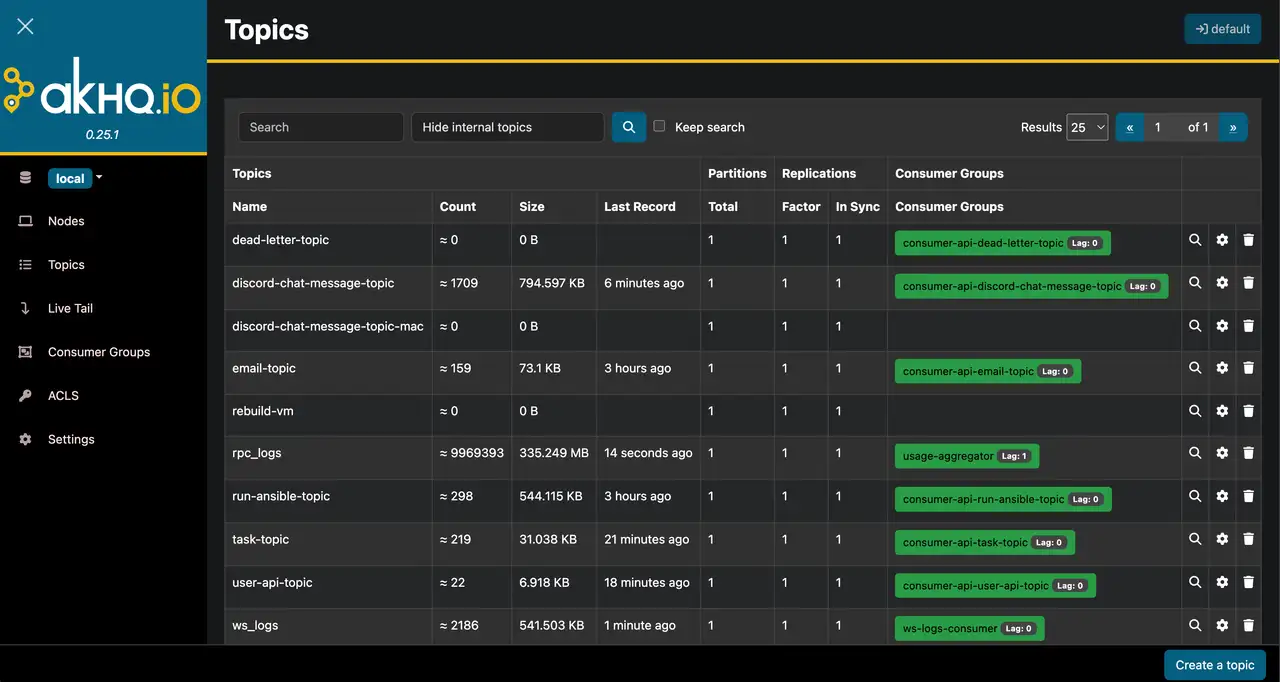

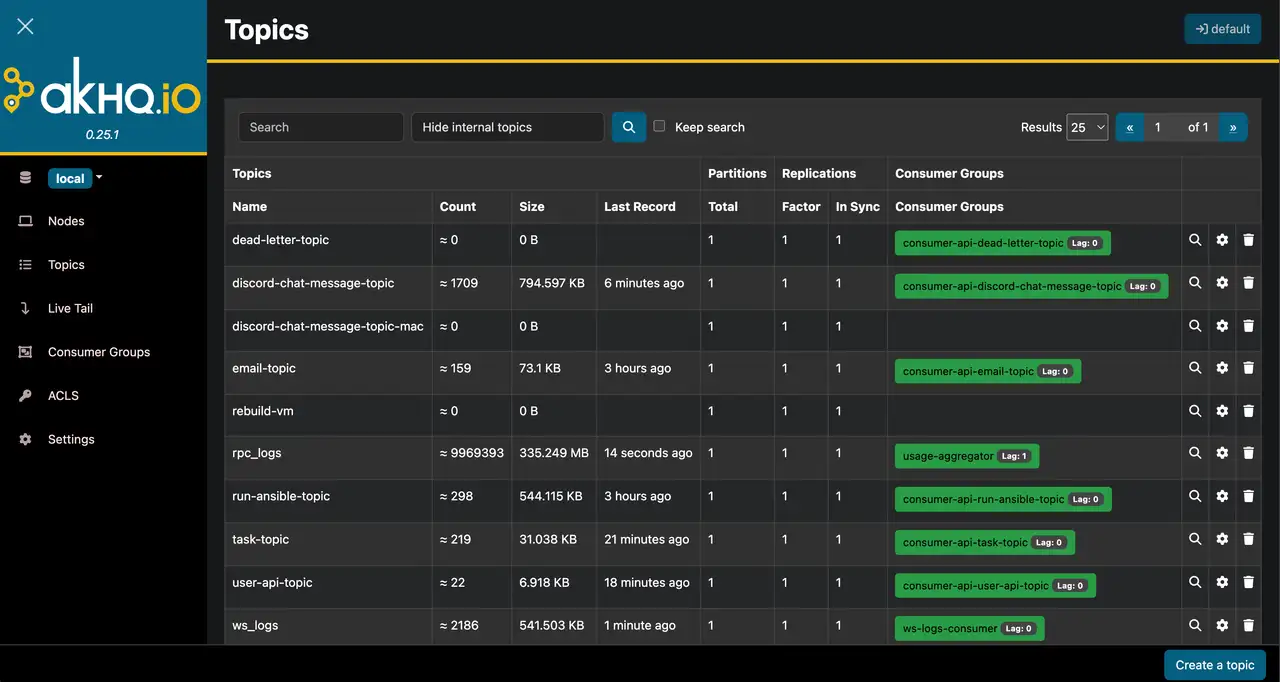

- Bundled with AKHQ UI for quick topic inspection, consumer lag monitoring, and debugging.
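Consumer lag, the figure AKHQ reports for each consumer group, is computed per partition as the difference between the partition's log-end offset and the group's last committed offset. The following Python sketch computes it from plain dictionaries. The topic name and offsets are invented for illustration; a real tool such as AKHQ fetches these offsets from the brokers instead.

```python
from typing import Dict, Tuple

# A partition is identified by (topic name, partition number).
TopicPartition = Tuple[str, int]


def consumer_lag(
    end_offsets: Dict[TopicPartition, int],
    committed: Dict[TopicPartition, int],
) -> Dict[TopicPartition, int]:
    """Return per-partition lag: log-end offset minus committed offset.

    Partitions with no committed offset are skipped, because where such a
    consumer starts reading depends on its reset policy.
    """
    lag: Dict[TopicPartition, int] = {}
    for tp, end in end_offsets.items():
        if tp not in committed:
            continue
        # Clamp at zero in case the two offsets were sampled at slightly different times.
        lag[tp] = max(end - committed[tp], 0)
    return lag


if __name__ == "__main__":
    # Hypothetical offsets for a topic named "orders" with three partitions.
    end = {("orders", 0): 1200, ("orders", 1): 980, ("orders", 2): 1500}
    committed = {("orders", 0): 1150, ("orders", 1): 980}
    per_partition = consumer_lag(end, committed)
    print(per_partition)
    print("total lag:", sum(per_partition.values()))
```

Skipping uncommitted partitions is a deliberate choice in this sketch: where such a consumer begins is governed by its auto.offset.reset setting, so no single lag value is correct for it.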

Run the Installer

Aim slv install at the hosts that will run Kafka brokers and pick the Kafka Cluster option.

Note: Replace 1.1.1.1 with the IP address of your own server.

Review the Details

Double-check the inventory and playbook path before moving forward. If you need to restrict the run to specific hosts in your inventory, pass --limit.

Observe Ansible Execution

slv invokes Ansible to configure Kafka brokers, systemd services, and supporting components such as ZooKeeper (if required by your template).

Verify the Endpoints

When the playbook finishes, the CLI prints the broker endpoint and AKHQ UI address so you can start publishing and inspecting messages immediately.
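Before opening AKHQ, you may want to confirm from your workstation that the printed endpoints accept connections. The sketch below is a plain TCP reachability check using only Python's standard library. Port 9092 is an assumption, chosen only because it is Kafka's conventional listener port; substitute the broker endpoint and AKHQ address the CLI actually prints.

```python
import socket


def is_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and tries each returned address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


if __name__ == "__main__":
    # Assumed endpoints: 9092 is Kafka's conventional port (an assumption), and
    # 5000 is the AKHQ port used in this guide. Replace with the CLI's output.
    endpoints = {
        "kafka broker": ("1.1.1.1", 9092),
        "akhq ui": ("1.1.1.1", 5000),
    }
    for name, (host, port) in endpoints.items():
        status = "reachable" if is_reachable(host, port) else "unreachable"
        print(f"{name} {host}:{port} -> {status}")
```

A successful TCP connection only shows that something is listening on the port; producing and consuming a test message remains the real end-to-end check.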

Dashboard

Access AKHQ at http://1.1.1.1:5000 to browse topics, inspect per-partition consumer lag, and manage consumer groups. Pair it with Grafana dashboards (for example, ones built on Kafka Exporter metrics) to complete your streaming observability stack.
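If you scrape Kafka Exporter with Prometheus for your Grafana dashboards, you can also spot-check lag straight from the exporter's metrics text. This sketch assumes the exporter exposes a kafka_consumergroup_lag gauge labelled with consumergroup, topic, and partition (as the commonly used danielqsj/kafka_exporter does; verify against your version) and sums it per consumer group. Fetching the text from the exporter's metrics endpoint is left out so the parsing stays self-contained, and the sample data is hypothetical.

```python
import re
from collections import defaultdict
from typing import Dict

# One Prometheus text-format sample: name{labels} value [timestamp].
# Simplified: assumes label values do not contain a literal "}".
SAMPLE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)\{(?P<labels>[^}]*)\}\s+(?P<value>\S+)'
)
LABEL_RE = re.compile(r'(\w+)="((?:[^"\\]|\\.)*)"')


def lag_by_group(metrics_text: str, metric: str = "kafka_consumergroup_lag") -> Dict[str, float]:
    """Sum per-partition lag samples of `metric` into one total per consumer group."""
    totals: Dict[str, float] = defaultdict(float)
    for raw in metrics_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        m = SAMPLE_RE.match(line)
        # Exact name match, so e.g. kafka_consumergroup_lag_sum is ignored.
        if not m or m.group("name") != metric:
            continue
        labels = dict(LABEL_RE.findall(m.group("labels")))
        group = labels.get("consumergroup", "<unknown>")
        totals[group] += float(m.group("value"))
    return dict(totals)


if __name__ == "__main__":
    # Hypothetical exporter output for two consumer groups on topic "orders".
    sample = """\
# TYPE kafka_consumergroup_lag gauge
kafka_consumergroup_lag{consumergroup="billing",partition="0",topic="orders"} 40
kafka_consumergroup_lag{consumergroup="billing",partition="1",topic="orders"} 2
kafka_consumergroup_lag{consumergroup="audit",partition="0",topic="orders"} 0
"""
    for group, lag in sorted(lag_by_group(sample).items()):
        print(f"{group}: {lag:.0f}")
```

In Grafana the same aggregation is usually a PromQL sum over the consumergroup label; this script is only a quick command-line cross-check.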